Real-time RGB-D based People Detection and Tracking for Mobile Robots and Head-worn Cameras

Omid Hosseini Jafari, Dennis Mitzel, Bastian Leibe

RWTH Aachen University

in ICRA 2014

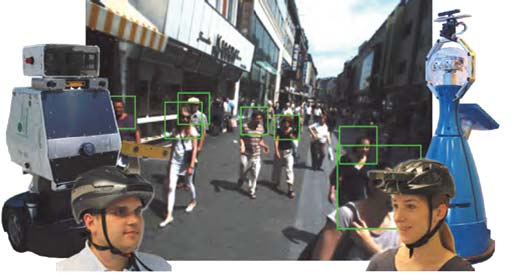

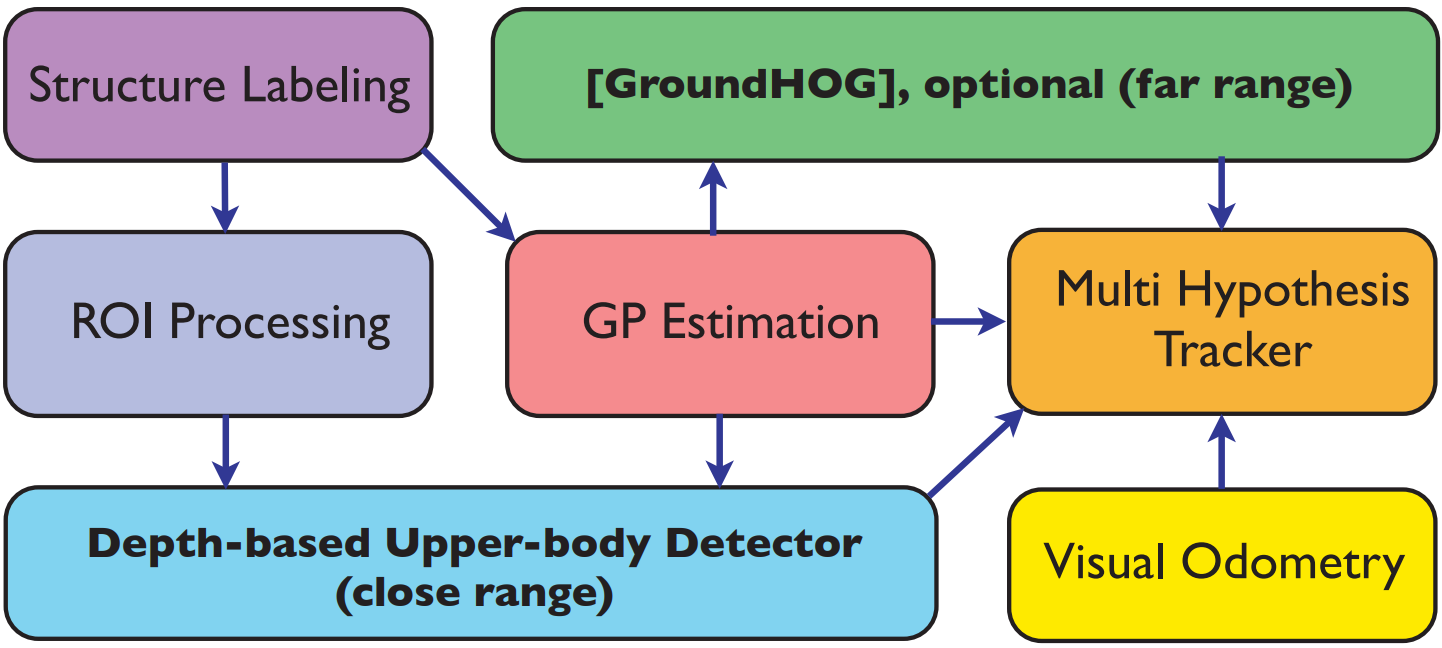

We present a real-time RGB-D based multi-person detection and tracking system suitable for mobile robots and head-worn cameras. Our approach combines RGB-D visual odometry estimation, region-of-interest processing, ground plane estimation, pedestrian detection, and multi-hypothesis tracking components into a robust vision system that runs at more than 20 fps on a laptop. As object detection is the most expensive component in any such integration, we invest significant effort into taking maximum advantage of the available depth information. In particular, we propose to use two different detectors for different distance ranges. For the close range (up to 5-7 m), we present an extremely fast depth-based upper-body detector that allows video-rate system performance on a single CPU core when applied to Kinect sensors. To also cover farther distance ranges, we optionally add an appearance-based full-body HOG detector (running on the GPU) that exploits scene geometry to restrict the search space. Our approach works with both Kinect RGB-D input for indoor settings and stereo depth input for outdoor scenarios. We quantitatively evaluate our approach on challenging indoor and outdoor sequences and show state-of-the-art performance in a large variety of settings.
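The idea of using two detectors for different distance ranges can be illustrated with a minimal sketch. This is not the released implementation: the names `Roi`, `pick_detector`, and `expected_pixel_height`, the 7 m threshold (taken as the upper end of the stated 5-7 m close range), the 525-pixel focal length, and the 1.7 m person height are all assumptions chosen for illustration. The second function shows one simple way scene geometry could restrict a detector's search space, by predicting a person's image height from depth under a pinhole camera model.

```python
from dataclasses import dataclass
from typing import Optional

# Assumption: upper end of the "5-7 m" close range named in the abstract.
CLOSE_RANGE_MAX_M = 7.0


@dataclass
class Roi:
    """Hypothetical region of interest with its median depth in meters."""
    roi_id: int
    depth_m: float


def pick_detector(roi: Roi, use_far_detector: bool = True) -> Optional[str]:
    """Choose a detector label for a region based on its distance.

    Close regions go to the depth-based upper-body detector; farther
    regions go to the optional appearance-based full-body HOG detector.
    Returns None when the far detector is disabled and the region is far.
    """
    if roi.depth_m <= CLOSE_RANGE_MAX_M:
        return "depth_upper_body"
    return "hog_full_body" if use_far_detector else None


def expected_pixel_height(depth_m: float,
                          focal_px: float = 525.0,
                          person_height_m: float = 1.7) -> float:
    """Pinhole-model estimate of a person's height in pixels at a depth.

    focal_px and person_height_m are illustrative defaults, not values
    taken from the paper.
    """
    if depth_m <= 0:
        raise ValueError("depth must be positive")
    return focal_px * person_height_m / depth_m


if __name__ == "__main__":
    for roi in (Roi(0, 3.0), Roi(1, 12.0)):
        print(roi.roi_id, pick_detector(roi), expected_pixel_height(roi.depth_m))
```

Under these assumptions, a detector only needs to evaluate window scales near the predicted pixel height at each depth, rather than scanning every scale at every image position.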

Paper

![[paper]](/projects/figs/jafari_etal_ICRA14.png)

Video

Source Code

[code]